Your blogger is about to vanish, returning in the new year. Many thanks for your past, present, and future support.

If you're at ASSA Atlanta, I hope you'll come to the Penn Economics and Finance parties.

Participants

| Last name | First name | Affiliation |
|---|---|---|
| Adusumilli | | |
| Althoff | Lukas | Princeton |
| Anderson | Rachel | Princeton |
| Bai | Jushan | Columbia |
| Beresteanu | Arie | Pitt |
| Callaway | Brantly | Temple |
| Chao | John | Maryland |
| Cheng | Xu | UPenn |
| Choi | Jungjun | Rutgers |
| Choi | Sung Hoon | Rutgers |
| Cox | Gregory | Columbia |
| Christensen | Timothy | NYU |
| Diebold | Frank | UPenn |
| Dou | Liyu | Princeton |
| Gao | Wayne | Yale |
| Gaurav | Abhishek | Princeton |
| Henry | Marc | Penn State |
| Ho | Paul | Princeton |
| Honoré | Bo | Princeton |
| Hu | Yingyao | Johns Hopkins |
| Kolesar | Michal | Princeton |
| Lazzati | Natalia | UCSC |
| Lee | Simon | Columbia |
| Li | Dake | Princeton |
| Li | Lixiong | Penn State |
| Liao | Yuan | Rutgers |
| Menzel | Konrad | NYU |
| Molinari | Francesca | Cornell |
| Montiel Olea | José Luis | Columbia |
| Müller | Ulrich | Princeton |
| Norets | Andriy | Brown |
| Plagborg-Møller | Mikkel | Princeton |
| Poirier | Alexandre | Georgetown |
| Quah | John | Johns Hopkins |
| Rust | John | Georgetown |
| Schorfheide | Frank | UPenn |
| Seo | Myung | SNU & Cowles |
| Shin | Youngki | McMaster |
| Sims | Christopher | Princeton |
| Spady | Richard | Johns Hopkins |
| Stoye | Jörg | Cornell |
| Taylor | Larry | Lehigh |
| Vinod | Hrishikesh | Fordham |
| Wang | Yulong | Syracuse |
| Yang | Xiye | Rutgers |
| Zeleneev | Andrei | Princeton |

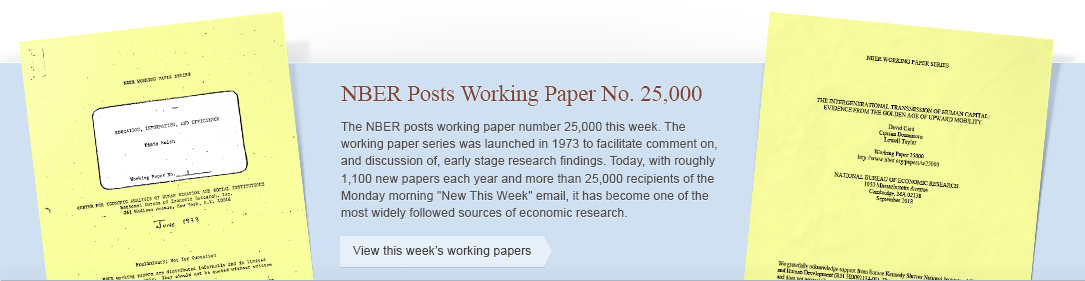

This morning's "New this Week" email included the release of the 25000th NBER working paper, a study of the intergenerational transmission of human capital by David Card, Ciprian Domnisoru, and Lowell Taylor. The NBER working paper series was launched in 1973, at the inspiration of Robert Michael, who sought a way for NBER-affiliated researchers to share their findings and obtain feedback prior to publication. The first working paper was "Education, Information, and Efficiency" by Finis Welch. The design for the working papers -- which many will recall appeared with yellow covers in the pre-digital age -- was created by H. Irving Forman, the NBER's long-serving chart-maker and graphic artist.

Initially there were only a few dozen working papers per year, but as the number of NBER-affiliated researchers grew, particularly after Martin Feldstein became NBER president in 1977, the working paper series expanded as well. In recent years, there have been about 1150 papers per year. Over the 45-year history of the working paper series, the Economic Fluctuations and Growth Program has accounted for nearly twenty percent (4916) of the papers, closely followed by Labor Studies (4891) and Public Economics (4877).

For many years, Banque de France has been at the forefront of disseminating statistical data to academics and other interested parties. Through Banque de France’s dedicated public portal, http://webstat.banque-france.fr/en/, we offer a large set of free downloadable series (about 40,000, mainly aggregated).

Banque de France has further expanded this service: in November 2016 it launched an “Open Data Room” in Paris, providing researchers with free access to granular data.

We are glad to announce that the “Open Data Room” service is now also available to US researchers through the Banque de France Representative Office in New York City.